Cyquential AI

Marrying the reasoning capabilities of Traditional AI with the generalization power of Modern AI

In artificial intelligence today, there is a major problem: there is currently no way to let AI systems learn from human instruction alone. Current LLMs can talk to humans and sometimes perform tasks for them, but anything that requires learning new material? Forget it. Companies instead have to design and train models dedicated to the desired task. Training a task-specific model is still an effective solution, but it typically costs hundreds of thousands to millions of dollars to develop and usually requires high-quality datasets, large amounts of compute, and many hours of skilled labor.

But what if there were a way to sidestep this problem entirely, making training as easy as teaching a person, just by talking to them?

Meet Cyquential AI: a hyper-efficient, human-trainable agent with full deductive and compositional reasoning and understanding capabilities, designed as a drop-in replacement for existing AI workflows. This is made possible by a self-building architecture in which neurons and synapses (formed through repetition) emerge on their own rather than being fixed hyperparameters.

Features

Independent Learning, done the human way

Cyquential AI learns without needing any labeled data or even a teaching signal. Because learning does not require an error function or a “correct answer,” working with the AI is largely hands-free. To train it, simply instruct it in English, the same way you would teach any other person. No more expensive teams of AI developers to handle development, retraining, weight freezing, or any other work on the model, even when adding new data or new tasks. The learned representations are still distributed, as in a neural network architecture, so the useful capabilities that modern deep learning provides are preserved.

Inductive logic and few-shot generalization with no black box

Because the Cyquential AI architecture represents information with generative symbols instead of point neurons, the model is fully transparent to introspection. Users can see exactly how the AI is reasoning, avoiding the black-box problem that has long plagued modern AI.
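As a rough illustration of why a symbolic representation can be inspected directly, here is a minimal sketch assuming each symbol is stored as a named node that records the symbols it was composed from. The `Symbol` class, the `explain` function, and the stroke names are hypothetical and are not taken from Cyquential AI's implementation; the point is only that an explanation becomes a walk down the composition tree rather than a reading of weight matrices.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Symbol:
    """Hypothetical symbol node: a readable name plus the symbols it was built from."""
    name: str
    parts: list[Symbol] = field(default_factory=list)


def explain(symbol: Symbol, depth: int = 0) -> list[str]:
    """Return an indented trace showing how a symbol is composed, top-down."""
    lines = ["  " * depth + symbol.name]
    for part in symbol.parts:
        lines.extend(explain(part, depth + 1))
    return lines


# Toy hierarchy: two stroke symbols compose into "cross", which grounds "plus_sign".
vertical = Symbol("vertical_stroke")
horizontal = Symbol("horizontal_stroke")
cross = Symbol("cross", [vertical, horizontal])
plus_sign = Symbol("plus_sign", [cross])

print("\n".join(explain(plus_sign)))
# plus_sign
#   cross
#     vertical_stroke
#     horizontal_stroke
```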

Very Low Compute Power, Easy Portability

Because Cyquential AI activates only the nodes relevant to the task at hand, rather than computing through the entire network, its overall processing requirements are very low. The size of the network is dynamic, so knowledge can even be trimmed to isolate core tasks. In addition, new nodes can build on all previously learned information, so each additional learned item takes up minimal space.
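The sparse-activation idea can be sketched as follows, assuming a hypothetical graph in which a node fires only when all of its parts are active. Activation is propagated upward from the active inputs only, so nodes that share no parts with the current input are never visited. `SparseGraph`, `add_node`, and `activate` are illustrative names, not part of any published Cyquential AI interface.

```python
from __future__ import annotations

from collections import defaultdict, deque


class SparseGraph:
    """Hypothetical node graph: a node fires only when all of its parts fire."""

    def __init__(self) -> None:
        self.parts: dict[str, set[str]] = {}
        self.parents: defaultdict[str, set[str]] = defaultdict(set)

    def add_node(self, name: str, parts: set[str] | None = None) -> None:
        """Add a node built from existing nodes (leaves have no parts)."""
        self.parts[name] = set(parts or ())
        for part in self.parts[name]:
            self.parents[part].add(name)

    def activate(self, inputs: set[str]) -> tuple[set[str], int]:
        """Propagate activation upward from the inputs only.

        Returns the active nodes and how many candidate nodes were checked.
        """
        active = set(inputs)
        queue = deque(inputs)
        checked = 0
        while queue:
            node = queue.popleft()
            for parent in self.parents[node]:
                if parent in active:
                    continue
                checked += 1
                if self.parts[parent] <= active:  # all parts active, so the node fires
                    active.add(parent)
                    queue.append(parent)
        return active, checked


graph = SparseGraph()
for leaf in ["a", "b", "c", "d", "z"]:
    graph.add_node(leaf)
graph.add_node("ab", {"a", "b"})
graph.add_node("cd", {"c", "d"})
graph.add_node("abcd", {"ab", "cd"})
for i in range(1000):  # unrelated knowledge that should cost nothing for this input
    graph.add_node(f"unrelated_{i}", {"z"})

active, checked = graph.activate({"a", "b"})
print(sorted(active), checked)  # ['a', 'ab', 'b'] 2
```

Here, 1,000 unrelated nodes exist but are never checked because none of their parts are active. The composite node `abcd` also stores only references to `ab` and `cd`, which mirrors, in a simplified way, how reusing earlier nodes keeps each new item small.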

How Does It Work?

A brief recap of AI History

In artificial intelligence, there have been two main paradigms: symbolic architectures (often called GOFAI, for “Good Old-Fashioned AI”) and deep learning. Symbolic AI is great at deductive logic, but it cannot generalize (inductive logic) and cannot learn from high-dimensional input, which ultimately leaves it unable to learn flexibly. In contrast, modern deep learning models are very good at inductive logic but struggle with deductive logic. Since deductive logic is needed for learning, these models must be explicitly designed to incorporate it.

Looking closer, each paradigm appears to solve the problem the other struggles with. The industry's answer has been neurosymbolic AI, essentially an architecture made up of both symbolic and deep learning layers. However, these layers still exist as separate units. This raises the question: what if there were a way to combine both technologies so that, instead of remaining separate units, they integrate into each other?

Combining the best of both worlds: Symbolic Knowledge from GOFAI, and Distributed Representation from DNNs

Cyquential AI is an attempt to answer exactly this question. The product of more than six years of research, it is a completely new architecture built on a new propositional learning hypothesis, called the Cyquential hypothesis. The hypothesis redesigns symbolic AI so that symbols are generated autonomously in the knowledge base from real-time perceptual data, rather than being hardcoded. The generated symbols represent task-generalized object representations and are created sequentially, so that each builds on those before it.
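The exact symbol-generation procedure of the Cyquential hypothesis is not described here, so the sketch below swaps in a well-known stand-in: repeatedly merging the most frequent adjacent pair in a perceptual stream into a new symbol, in the style of byte-pair encoding. It is only meant to show what it looks like for symbols to be created sequentially, with each one built from earlier ones; the function names and the toy stream are assumptions.

```python
from collections import Counter


def most_frequent_pair(seq):
    """Most frequent adjacent pair (ties go to the pair seen first), or None if none repeats."""
    pairs = Counter(zip(seq, seq[1:]))
    if not pairs:
        return None
    best = max(pairs, key=pairs.get)  # max() keeps the first maximal key in insertion order
    return best if pairs[best] > 1 else None


def merge(seq, pair, new_symbol):
    """Replace every non-overlapping occurrence of `pair` with `new_symbol`, left to right."""
    out, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            out.append(new_symbol)
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def build_symbols(seq, max_symbols=10):
    """Create symbols one at a time; each new symbol is made of two earlier ones."""
    symbols = {}
    for n in range(max_symbols):
        pair = most_frequent_pair(seq)
        if pair is None:
            break
        name = f"S{n}"
        symbols[name] = pair
        seq = merge(seq, pair, name)
    return symbols, seq


def expand(symbol, symbols):
    """Recursively unpack a generated symbol back into raw input tokens."""
    if symbol not in symbols:
        return symbol
    left, right = symbols[symbol]
    return expand(left, symbols) + expand(right, symbols)


stream = list("xyxyzxyxyz")  # toy stand-in for a stream of perceptual tokens
symbols, remaining = build_symbols(stream)
print(symbols)  # {'S0': ('x', 'y'), 'S1': ('S0', 'S0'), 'S2': ('S1', 'z')}
print(remaining)  # ['S2', 'S2']
print(expand("S2", symbols))  # xyxyz
```

Because `S2` is defined in terms of `S1`, which is defined in terms of `S0`, every generated symbol can be expanded back to the raw input it summarizes.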

Rethinking how AI Architecture works, from the ground up

As a result, Cyquential AI is a previously unseen kind of self-building architecture, in which neurons and synapses form on their own rather than being fixed hyperparameters. This is what enables Cyquential AI to perform full continual learning with minimal catastrophic forgetting: no existing neurons are ever deleted or changed.
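A minimal sketch of append-only growth, assuming a hypothetical layer that allocates a new node whenever an input is farther than a fixed `threshold` from every existing node. The allocation rule is loosely inspired by novelty-driven schemes such as Adaptive Resonance Theory, but unlike those it never updates existing nodes. `GrowingNetwork` and `observe` are illustrative names, and this is not claimed to be how Cyquential AI forms its neurons.

```python
from __future__ import annotations

import math


class GrowingNetwork:
    """Hypothetical self-building layer: nodes are appended, never edited or removed."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.nodes: list[tuple[float, ...]] = []

    def observe(self, x: tuple[float, ...]) -> int:
        """Return the first node within `threshold` of x, growing a new node if none is."""
        for i, node in enumerate(self.nodes):
            if math.dist(node, x) <= self.threshold:
                return i  # reuse an existing node without modifying it
        self.nodes.append(tuple(x))  # novel input: allocate a new node
        return len(self.nodes) - 1


net = GrowingNetwork(threshold=0.5)

# Task A
ids_a = [net.observe(x) for x in [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0)]]
snapshot = list(net.nodes)

# Task B, learned afterwards
ids_b = [net.observe(x) for x in [(5.0, 5.0), (5.1, 5.0), (0.0, 0.1)]]

print(ids_a, ids_b, len(net.nodes))  # [0, 0, 1] [2, 2, 0] 3
assert net.nodes[: len(snapshot)] == snapshot  # task A nodes are unchanged
```

Since learning the second task only appends nodes, the final assertion holds by construction: the nodes created for the first task are exactly as they were.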

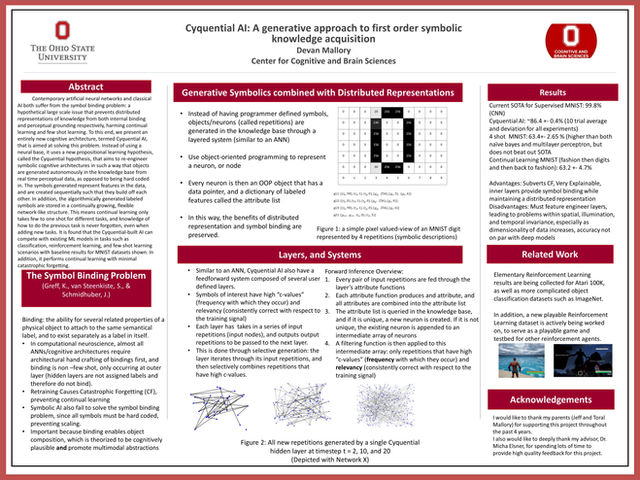

Formal Abstract

Contemporary artificial neural networks and classical AI both suffer from the symbol binding and grounding problem, which prevents distributed representations of perceptual knowledge from being bound to internal objects. Cyquential AI is a new type of symbolic model designed to circumvent this problem. It uses a new propositional learning hypothesis, called the Cyquential hypothesis, which aims to re-engineer physical symbol systems so that symbols are generated autonomously in the knowledge base from real-time perceptual data, rather than being hardcoded. The generated symbols often represent task-generalized object representations and are created sequentially, so that each builds on those before it. In addition, the algorithmically generated, labeled symbols are stored in a continually growing, flexible, network-like structure. As a result, continual learning of a new task takes only one to a few examples, and knowledge of how to perform previous tasks is never forgotten, even as new tasks are added. The Cyquential-built AI is found to adequately perform single-pass, few-shot recognition on the Fashion-MNIST dataset (65 percent accuracy) without using deep learning or any external data. It also performs continual learning to recognize MNIST handwritten digits while retaining the ability to recognize Fashion-MNIST.
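For readers who want a concrete point of comparison, the sketch below implements a generic single-pass, few-shot baseline: a nearest-class-mean (prototype) classifier that sees each example once and can add new classes later without touching old ones. It is a standard technique swapped in for illustration, not the Cyquential method, and it runs on made-up 2-D points rather than on MNIST or Fashion-MNIST; the class and method names are hypothetical.

```python
from __future__ import annotations

import math


class PrototypeMemory:
    """Generic nearest-class-mean classifier, trained in a single pass over the examples."""

    def __init__(self) -> None:
        self.sums: dict[str, list[float]] = {}
        self.counts: dict[str, int] = {}

    def learn(self, x: list[float], label: str) -> None:
        """Fold one example into its class's running sum; each example is seen once."""
        if label not in self.sums:
            self.sums[label] = [0.0] * len(x)
            self.counts[label] = 0
        self.sums[label] = [s + v for s, v in zip(self.sums[label], x)]
        self.counts[label] += 1

    def predict(self, x: list[float]) -> str:
        """Return the label whose prototype (class mean) is closest to x."""

        def distance(label: str) -> float:
            mean = [s / self.counts[label] for s in self.sums[label]]
            return math.dist(mean, x)

        return min(self.sums, key=distance)


memory = PrototypeMemory()

# Task 1: two classes, two examples each.
for x, label in [([0.0, 0.0], "shirt"), ([0.2, 0.0], "shirt"),
                 ([4.0, 0.0], "shoe"), ([4.0, 0.2], "shoe")]:
    memory.learn(x, label)

# Task 2, learned later: new classes only, one example each.
for x, label in [([0.0, 4.0], "digit_0"), ([4.0, 4.0], "digit_1")]:
    memory.learn(x, label)

queries = [[0.1, 0.1], [3.9, 0.0], [0.1, 3.8], [4.1, 4.1]]
predictions = [memory.predict(q) for q in queries]
print(predictions)  # ['shirt', 'shoe', 'digit_0', 'digit_1']
```

Adding the digit classes leaves the `shirt` and `shoe` prototypes untouched, which is the simplest form of the continual-learning property described in the abstract.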

2022 Ohio State Undergraduate Cognitive Science Fest: Poster 1st Place